Source attribution study findings, part 2

This is part two of our source attribution study findings series. Read part one here.

As I sifted through participants' responses, it became clear that the findings of my study were not only surprising but also nuanced. The open-ended feedback offered deeper insight into how readers engage with articles and how sourcing, tone, and scientific detail influence their trust. Several themes recurred, and they raised new questions about how I might refine my understanding of trust in scientific reporting.

One of the most consistent points across responses was concern over articles that lacked citations. Readers repeatedly expressed discomfort with the absence of formal sourcing, with many saying things like, "I did question that there were no citations," or "Need citations, & some summary stats." The lack of formal attribution didn't always lead readers to reject the article outright, but it did raise red flags. Without citations, the article seemed less academically rigorous, which left readers uncertain about the credibility of its claims.

This matches my earlier hypothesis that missing citations may lead some readers to question the reliability of the content, but it also adds a layer of complexity. In some cases, the lack of citations may make the article feel more approachable and independent, which could help explain why it still scored higher than expected. That doesn’t mean readers were fully comfortable with the content. They may simply have felt less conflicted about it than they would have about an overly formal piece with extensive references.

Another takeaway came from responses about quotes from unaffiliated experts. Participants were largely receptive to quotes from researchers involved in the study and said they trusted these more. When the article featured conclusions or opinions from experts not affiliated with the research, however, many respondents expressed distrust, saying things like, "Trust: direct quotes from associated researchers. Distrust: conclusion provided by someone unaffiliated with the project."

Affiliation seems to matter more than I initially anticipated. When a quote comes from someone not directly involved in the study, readers may get the impression that the information is being filtered or diluted. This finding reinforces the idea that trust is tied to transparency, not only about who the sources are but also about how closely those sources are connected to the research in question.

The article's tone also played a significant role in shaping trust, particularly through the level of detail it provided. Many respondents felt that the conversational tone was both a blessing and a curse. One participant mentioned, "It read more like a conversation and I would have wanted more details about their study," while another said, "I would’ve liked more detail on the mechanism by which pollutants mask smell, and more context for the first scientist quoted."

The article's casual, simplified presentation appeared to leave some readers feeling disconnected from the science itself and wanting more technical depth. This wasn’t a universal response, though: others seemed to appreciate the article's approachability. The key takeaway is that simplicity can draw readers in, but it can also make them feel that something is being left out, especially on a scientific topic.

Another theme that stood out was the importance of a clearly described methodology. Several participants said the study's methods were unclear, especially regarding the use of fake flowers. One participant noted, "The article mentioned field and lab tests, but did not delineate which were the field or lab tests, and what each specifically found." Without a clear explanation of how the experiments were set up and carried out, readers were left skeptical.

When reporting on scientific findings, the methodology is just as crucial as the findings themselves. Without a clear understanding of how the research was conducted, readers are left to question the reliability of the conclusions. Even the most rigorous findings can be undermined when it is unclear how they were obtained.

A related concern was the absence of data visualizations and summaries of key findings. One respondent remarked, "Cogently, clearly written. BUT… where are the citations? Or a graph or table with a summary of key data?" This feedback echoes a broader pattern in the responses: the writing was clear and coherent, but the article could have benefited from more concrete evidence, such as data tables or figures, to support its claims.

This made me realize that, in the eyes of many readers, data visualizations aren’t just supplementary; they’re essential for making an article feel grounded in empirical evidence. A visual summary would not change the underlying science, but it could make the article's rigor more visible and the findings feel more tangible and credible.

Perhaps the most telling feedback came from respondents who noted that the article never mentioned the study's limitations. One participant commented, "There was also no mention of the limitations of the study, which made me feel like the findings were being overstated." This struck me as a pivotal point: acknowledging limitations in scientific reporting is not a mere formality but a vital part of building trust with readers.

Without any mention of limitations, the article risked coming across as overconfident, which in turn made some readers more skeptical. Trust isn't just about presenting the facts; it also depends on showing humility and transparency about the constraints of the research. Omitting those details can make a study's findings seem more definitive than they actually are.

Finally, not knowing which publication the article came from also shaped trust. One respondent pointed out, "It's partly dependent on the publication I'm reading and also I am now reading from the mind of the part of the population that doesn't believe in science at all." Trust isn't built in a vacuum; it is also influenced by the broader context of where an article appears and by the reader's own beliefs.

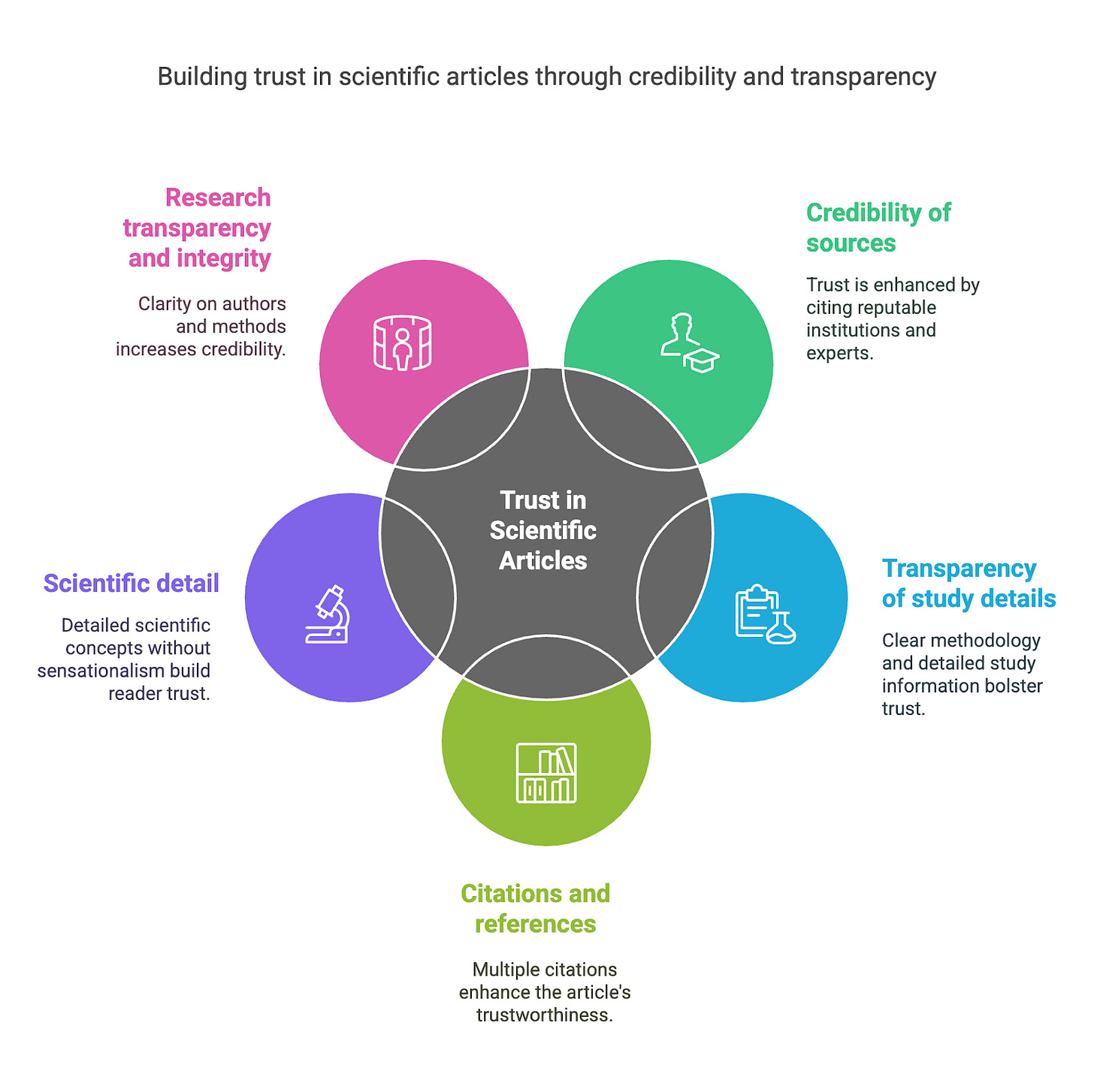

Looking back on the findings, I’m left with a much more complex picture of how trust operates in scientific reporting. Trust is not just about source attribution; it also depends on the quality and context of that attribution, the tone of the writing, the clarity of the methodology, and the overall transparency of the article.